Looking for the best trending tweets?

by Tweet Hunter

Here are the top trending topics from the last 7 days

Tweet Hunter analyzes a huge number of tweets and extracts the most trending topics, so you always know what to tweet about to stay hot.

Kendrick Lamar and Drake: The Rap Battle of the Decade

In the world of hip-hop, few rivalries have captured the attention of fans and critics alike as much as the ongoing battle between Kendrick Lamar and Drake. This feud has become the stuff of legend, with both artists dropping diss tracks that are as clever as they are cutting, showcasing their lyrical prowess and deep understanding of the

(kendrick, drake, the, to, is, and, lamar, kendrick lamar)

Best tweets on this topic

drake really sat in the studio for 24 hours just to say "if im a liar... why arent my pants on fire?"

I only want drake to keep replying so I can listen to more kendrick songs

𝕺 𝕮𝖔𝖗𝖛𝖔

@visecs

Kendrick Lamar got too much information son. J. Cole DEFINITELY dropped out the beef before he exposed his dreads are fake, he hiding 3 kids and he got a gluten allergy or some shit lmaooo

Kids and Their School Life

(kids, to, the, their, is, and, school, in)

Best tweets on this topic

A Kwanyama

@AlexiaSchlechte

I wanna propose a bill that keeps children off social media until the age of 18. Parents can’t post their kids either.

Football Tweet ⚽

@Football__Tweet

🗣️ Gabriel Batistuta: "One of my children works in a printing shop. People often say 'Bati's son works at a photocopier' as if he is doing something unworthy... I could happily give each of my children a car, but I don't know if they would be happy, I don't know how long that happiness would last. Because inside, when they get in the car, they know that it's not their car, but their father's. I understand that they don't have the same pleasure when they drive a 'normal' car, but at least they will say to themselves: 'I have this car because I deserved it.' For me, having my children work gives them dignity.” 🇦🇷🙏

Àbíkẹ́

@gamvchirai

So Norway banned smartphones in schools and 3 years later, girls’ GPAs are up, their visits to mental health professionals are down 60% and bullying in both boys and girls is down 43-46%. That’s wild

AI Integration and Evolution in Technology and Society

The landscape of artificial intelligence (AI) is rapidly evolving, influencing various aspects of technology and daily life. From Apple's potential AI advancements in the iPhone and iOS to the challenges with Siri, the journey of AI integration presents a mixed bag of progress and hurdles. The historical CUDA/C++ origins of Deep Learning, highlighted by the ImageNet/AlexNet breakthrough in

(ai, the, to, and, of, for, is, on)

Best tweets on this topic

Marques Brownlee

@MKBHD

On one hand: It seems like it's only a matter of time before Apple starts making major AI-related moves around the iPhone and iOS and buries these AI-in-a-box gadgets extremely quickly

On the other hand: Have you used Siri lately?

Anthropic

@AnthropicAI

The Claude iOS app has arrived. The power of frontier intelligence is now in your back pocket. Download now on the App Store: https://apps.apple.com/us/app/claude/id6473753684 … pic.twitter.com/yabpNuziQz

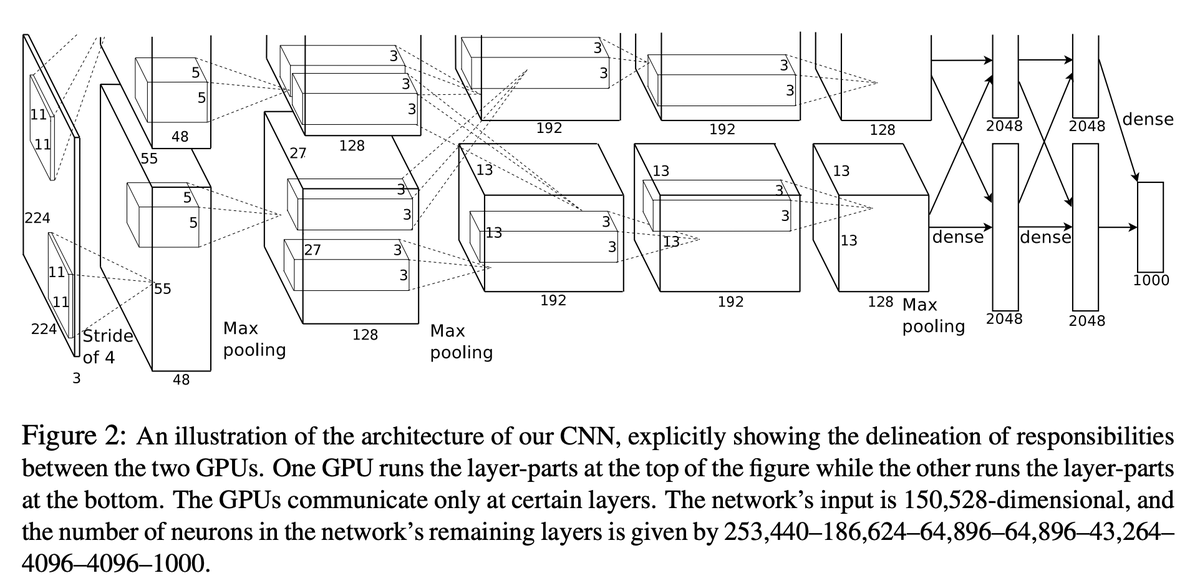

Andrej Karpathy

@karpathy

CUDA/C++ origins of Deep Learning

Fun fact: many people might have heard about the ImageNet / AlexNet moment of 2012, and the deep learning revolution it started. https://t.co/2xjLWODMOf

What's maybe a bit less known is that the code backing this winning submission to the contest was written from scratch, manually in CUDA/C++ by Alex Krizhevsky. The repo was called cuda-convnet and it was here on Google Code: https://t.co/ch137VSYZ4 I think Google Code was shut down (?), but I found some forks of it on GitHub now, e.g.: https://t.co/zYhzdUxoEN

This was among the first high-profile applications of CUDA for Deep Learning, and it is the scale that doing so afforded that allowed this network to get such a strong performance in the ImageNet benchmark. Actually this was a fairly sophisticated multi-GPU application too, and e.g. included model-parallelism, where the two parallel convolution streams were split across two GPUs.

You have to also appreciate that at this time in 2012 (~12 years ago), the majority of deep learning was done in Matlab, on CPU, in toy settings, iterating on all kinds of learning algorithms, architectures and optimization ideas. So it was quite novel and unexpected to see Alex, Ilya and Geoff say: forget all the algorithms work, just take a fairly standard ConvNet, make it very big, train it on a big dataset (ImageNet), and just implement the whole thing in CUDA/C++. And it's in this way that deep learning as a field got a big spark. I recall reading through cuda-convnet around that time like... what is this :S

Now of course, there were already hints of a shift in direction towards scaling, e.g. Matlab had its initial support for GPUs, and much of the work in Andrew Ng's lab at Stanford around this time (where I rotated as a 1st year PhD student) was moving in the direction of GPUs for deep learning at scale, among a number of parallel efforts.
But I just thought it was amusing, while writing all this C/C++ code and CUDA kernels, that it feels a bit like coming back around to that moment, to something that looks a bit like cuda-convnet.

Trading Insights and Wisdom for Aspiring Traders

(trading, you, your, the, and, to, trader, on)

Best tweets on this topic

Anthony Crudele

@AnthonyCrudele

No trading for me today, just watched the action on the first day of the month and FOMC. Confirmed my thoughts on the current market. It's a two-way tape. No one has the upper hand. The market will hunt stops. Opportunities on both sides. Key is to be small and smart. Patience.

Once you finally find your model... Trading will feel like an illegal money printer. You know when to execute, and when to sit back. You know what to look for, and when to look for it. You know how much to risk, and on which accounts. Cheatcode.

Tony Trades

@ScarfaceTrades_

I made $100k in April trading. You want to know what I did?

- I took 1 setup ONLY for 30 days.
- I traded the same 1-3 tickers all month.
- I took 2 trades on average daily.
- I followed my plan for every trade.

That’s it. Stop over complicating it, simplicity is key.

How to Gain Followers and Make an Impact on Social Media

(followers, to, the, you, my, in, for, days)

Best tweets on this topic

cillia Baby 💕

@bigprissy_

+2347032661011 please everybody tell her to unblock me I wasn't cheating, I was just sleeping 😭💔🤌

Alma🌿🍎

@AlmaKRowan

nope, deleted, not in a mood for drama 😒 Anyway, 500 or 50 000, be the change you want to see in the world. You don't need to wait for 100k follows to help smaller accounts, you can do it *now*, with your 150.

Want to drive more opportunities from X?

Content Inspiration, AI, scheduling, automation, analytics, CRM.

Get all of that and more in Tweet Hunter.
